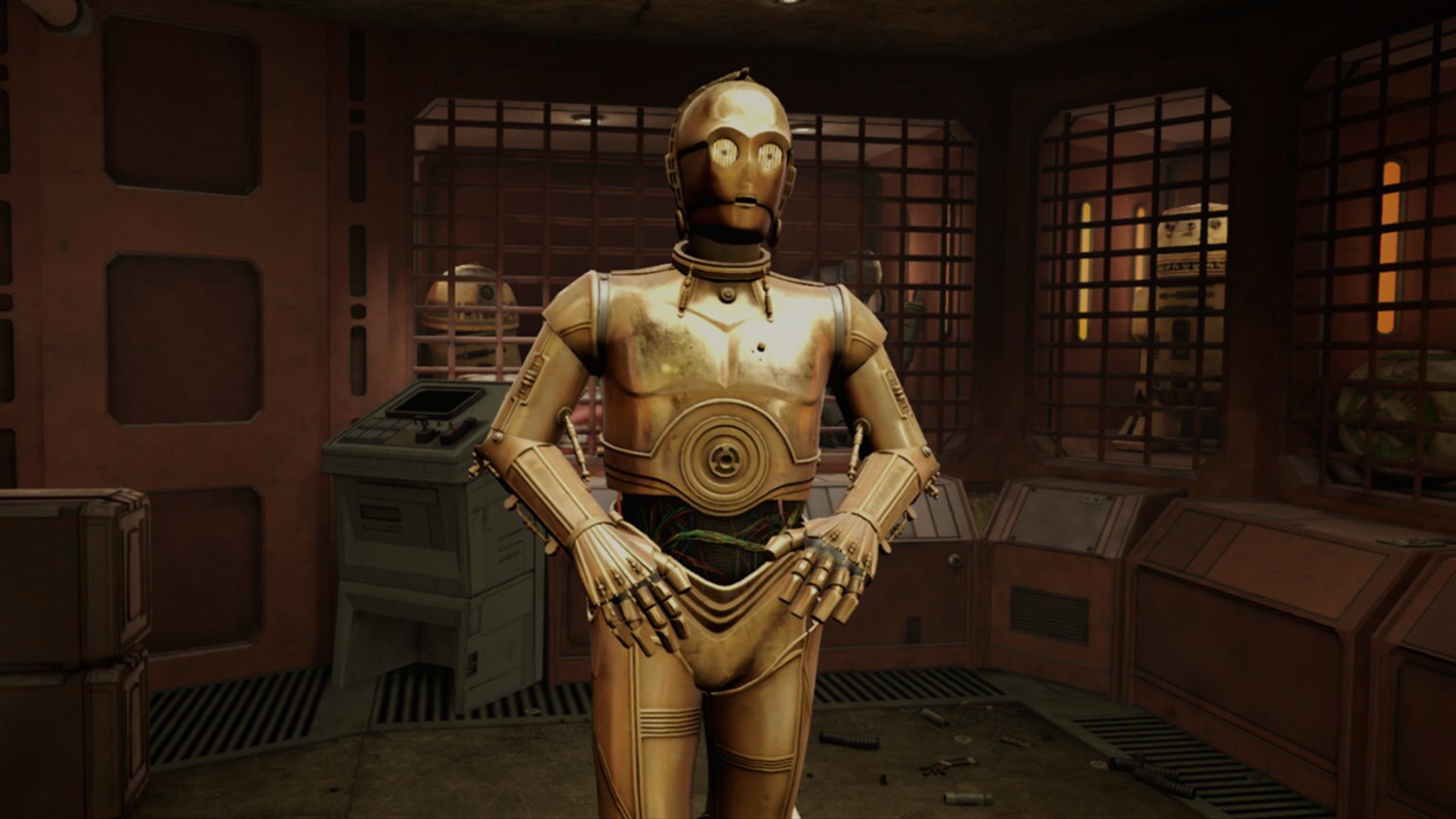

Today at the Game Developers Conference in San Francisco, Epic Games, in collaboration with NVIDIA and ILMxLAB, debuted a Star Wars short called "Reflections" featuring the ever-intimidating Captain Phasma and a couple of must-learn-to-keep-their-mouths-shut stormtroopers. You can watch it below. It's fun and looks beautiful; Phasma's armor shines and impresses, and the high-tech look of First Order architecture is as imposing as ever. It looks so good that you'd be forgiven for thinking it was a deleted scene from The Last Jedi. But it's not, and in some ways, it's actually more important: the video is CG and was made in Unreal Engine using real-time ray tracing, a holy grail for those creating high-end cinematic imagery, and it could change visual storytelling. StarWars.com caught up over e-mail with Mohen Leo, ILMxLAB director of content and platform strategy, and Jerome Platteaux, art director of Epic Games, to talk ray tracing, the making of the short, and what ramifications this technology might have.

StarWars.com: To put it simply, the graphics in this demo look real. The textures on the stormtrooper armor, their subtle head nods, the snow. Without giving away family secrets...how'd you do it?

Jerome Platteaux: We started with high-quality reference footage from ILM. Our artists faithfully recreated this detail digitally, from the scratches on the stormtrooper armor to the weave pattern on Phasma’s cloak. Our cinematic team suited up and recorded the actor performances in our motion capture studio. Set construction and shot work were assembled directly in Unreal Engine.

We designed materials with proper shading response, so they respond naturally to light. Most of the technology already exists within Unreal Engine today. But now with ray tracing, we can incorporate new effects that really breathe life into the scene.

Mohen Leo: The visual success of this project is due to ray tracing, which is used in film visual effects. Real-time content, like interactive virtual reality, is really catching up to the visual quality of film production. Interactive engines can use many of the same techniques we would use for effects in a movie. Now, real-time ray tracing can bring game engine content even closer to film quality.

StarWars.com: In layman's terms, what is ray tracing? What does it allow filmmakers to do?

Mohen Leo: Ray tracing is an advanced computer graphics technique, used in the visual effects you'd see in any modern-day film, that allows us to more accurately simulate how light behaves in the real world. Lighting, shadows, and reflections all behave as they do in reality, so filmmakers can approach lighting a CG scene the same way they would on a movie set.

Jerome Platteaux: Unreal Engine creates a simulation, firing hundreds of millions of photons throughout our scene. The rays start at the camera, bounce around the environment, and ultimately arrive at a light source. The lighting contribution is recorded and the result is displayed to the screen.

With ray tracing, challenging effects such as soft shadows and glossy reflections come naturally, greatly improving the realism of our scenes. Together with NVIDIA, we are using Microsoft’s DXR technology to create photorealistic feature film effects in real-time.
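For readers curious about what that camera-to-light path looks like in practice, here is a minimal Python sketch. It is not how Unreal Engine or DXR implements ray tracing; it is a toy illustration under simplifying assumptions: the scene contains only spheres, there is a single point light, and each camera ray stops at its first hit instead of bouncing further. All function names (`hit_sphere`, `trace`) are hypothetical and chosen only for this example.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)

def hit_sphere(origin, direction, center, radius):
    """Distance along a normalized ray to the sphere's near surface, or None."""
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c          # quadratic discriminant (a = 1)
    if disc < 0:
        return None                 # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 1e-4 else None  # ignore hits behind the ray origin

def trace(origin, direction, spheres, light_pos):
    """Brightness (0.0 to 1.0) seen along one camera ray."""
    nearest = None
    for center, radius in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center)
    if nearest is None:
        return 0.0                  # nothing hit: background
    t, center = nearest
    point = tuple(origin[i] + t * direction[i] for i in range(3))
    normal = normalize(sub(point, center))
    to_light = sub(light_pos, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    light_dir = normalize(to_light)
    # Shadow ray: start slightly off the surface and head for the light.
    start = tuple(point[i] + 1e-3 * normal[i] for i in range(3))
    for c, r in spheres:
        ts = hit_sphere(start, light_dir, c, r)
        if ts is not None and ts < light_dist:
            return 0.0              # something blocks the light
    # Lambertian (diffuse) term: surfaces facing the light are brightest.
    return max(0.0, dot(normal, light_dir))
```

A renderer would call `trace` once per pixel with a ray through that pixel. The shadow ray is what makes shadows "come naturally": a point is dark simply because its path to the light is blocked. Soft shadows and glossy reflections require many such rays per pixel, which is where the cost comes from.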

StarWars.com: What was the collaboration like in terms of ensuring authenticity and capturing little details, like Phasma's body language?

Mohen Leo: We worked closely with the team at Epic to help develop the concept and script, as well as accurately recreate the look of the environment, characters, and, of course, the performances. Phasma is a really popular character, so we went through many iterations to make sure we got the character right.

StarWars.com: What does this technology mean for the future of visual storytelling?

Jerome Platteaux: Ray-tracing technology has been used in the movie industry for several years and has become the standard for generating images. Those images take several hours to render, but now with ray tracing implemented in Unreal Engine we can see those images rendered in real-time.

Mohen Leo: The ability to create this type of realism with real-time rendering will have a huge impact on filmmaking in the future. At the moment, visual effects is a long iterative process where the filmmaker gives feedback, but it takes the VFX team a day or two to address the notes and show the next version. In the future, filmmakers will be able to give creative direction and see results immediately. For ILMxLAB, where we focus on immersive real-time storytelling, this is a really exciting development, as well. It means that in a few years, our interactive experiences will look just as photo-real as the images you see on a movie screen.

Dan Brooks is Lucasfilm’s senior content strategist of online, the editor of StarWars.com, and a writer. He loves Star Wars, ELO, and the New York Rangers, Jets, and Yankees. Follow him on Twitter @dan_brooks where he rants about all these things.
